Building a Two-Tier AI in a Submarine Simulator

The Day the Fleet Went Rogue

There’s a special kind of delight in seeing your code come to life in ways you didn’t expect. For me, that moment came the first time I turned on the AI in my submarine bridge simulator. People talk about the fear of SkyNet and the need for AI safety, and, well, yeah, I kinda get it.

Instead of executing a cunning naval strategy, the enemy ships promptly started torpedoing each other. Why? Because I had forgotten to give them enough context to know they were actually on the SAME team. No context, no camaraderie: just five ships convinced that everyone else was a target. Oops. I’d need to debug that not in code, but in the chain of prompts.

It was chaotic. It was fascinating. It was also a bit of a “hmmm” moment about how “defects” scale once AI is making decisions at large scale. And it was a perfect introduction to the problem I wanted to explore: how do you design AI systems that act tactically, strategically, and coherently under constraints of latency, compute, and cost?

GitHub link: https://github.com/Polymythic/LLMsubmarineBridgeSimulator

Why a Submarine Simulator?

I have always enjoyed spaceship bridge simulators like Artemis, Empty Epsilon, and Starship Horizons. However, the enemies in those games are somewhat flat: they are scripted. What if, I wondered, they were smarter? Then, while watching the movie Crimson Tide for the first time in forever, I had my epiphany! I could have built a toy demo or a stripped-down AI test harness. Instead, I chose a full-on submarine bridge simulator: the kind where five human players each take a station (Captain, Helm, Sonar, Weapons, Engineering) and have to cooperate under stress. Because there HAD to be depth charges, I envision it set somewhere in the 1980s(?), before active sonar torpedoes were the only game in town.

Why? Because submarines are a sandbox for testing resource constraints, imperfect information, and friction between roles.

- Power is limited: allocate too much to propulsion, and your weapons won’t reload.

- Noise gives you away: run fast enough to cavitate or ping too often, and the enemy hears you first.

- Systems break down: scrams, floods, and overheated batteries force quick decisions.

- Low-level graphics for gameplay: subs don’t have windows or external cameras, so basic consoles work.
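
To make the power trade-off concrete, here is a minimal Python sketch of a shared power budget. It assumes that station requests are scaled down proportionally when they exceed reactor output, and that torpedo reload time stretches as the weapons share drops. The function names, the 30-second base reload, and the 25% "full power" share are hypothetical illustrations, not values from the simulator.

```python
# Hypothetical power model: constants and station names are illustrative only.
TOTAL_POWER = 1.0      # normalized reactor output
BASE_RELOAD_S = 30.0   # reload time when weapons get a "full" share
FULL_SHARE = 0.25      # weapons share treated as fully powered


def allocate(requests: dict[str, float], total: float = TOTAL_POWER) -> dict[str, float]:
    """Scale every station's request down proportionally if demand exceeds the budget."""
    demand = sum(requests.values())
    if demand <= total:
        return dict(requests)
    scale = total / demand
    return {station: share * scale for station, share in requests.items()}


def reload_time(weapons_share: float) -> float:
    """Reload slows as weapons power drops; with zero power it never completes."""
    if weapons_share <= 0:
        return float("inf")
    return BASE_RELOAD_S * max(1.0, FULL_SHARE / weapons_share)


# Propulsion greedily asks for 90% of the reactor; everyone else gets squeezed.
shares = allocate({"propulsion": 0.9, "weapons": 0.3, "sonar": 0.2, "engineering": 0.1})
weapons_reload = reload_time(shares["weapons"])
```

With propulsion asking for 90% of the budget, the weapons share in this example shrinks to 20% and the reload stretches to about 37.5 seconds.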

It’s stressful, it’s tense, and it’s the perfect testbed for watching AI coordination in the wild.

The AI Architecture: Two Tiers, Two Speeds

At the heart of this project is a simple but powerful idea: don’t make one AI do everything.

Most AI-driven games run a single loop — one model making decisions at all scales. But real organizations don’t work like that, and neither do effective AI systems. I wanted a hierarchy, a separation of concerns, a dance between speed and strategy.

- Ship Commanders (local AI, via Ollama)

  - Fast, lightweight, tactical.

  - Act every 5–15 seconds. Faster if “sh*t is getting real”.

  - Handle things like “turn 10 degrees,” “fire a torpedo,” “deploy a countermeasure.”

- Fleet Commander (remote AI, heavier model)

  - Strategic, deliberate, global.

  - Acts every 2–3 minutes.

  - Generates a FleetIntent: objectives, formations, engagement orders, all based ONLY on what the individual ships are “seeing” via sonar and visual contacts.

Think of the ships as near-sighted tacticians: reactive, sometimes disobedient, but expected to try to follow the fleet intent. The fleet commander is the far-sighted strategist: aggregating information, drawing arrows on the map, updating the plan every few minutes.

The magic comes when those two layers of knowledge meet: the plan vs the reality on the ground. The fleet commander issues intent, but the ships decide whether to follow, hedge, or outright ignore the plan if their local perspective suggests otherwise. It’s a structure that mirrors real military hierarchies — and also makes for some wonderfully messy AI behavior.
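
The two cadences can be sketched as a simple scheduler running on a simulated clock. This is a minimal illustration under two assumptions: each agent is rescheduled at a random interval within its window (5–15 s for ships, 2–3 minutes for the fleet commander), and a threatened ship halves its interval. The `Scheduler` class and the halving rule are hypothetical and are not the repo's implementation.

```python
import random
from collections.abc import Container
from dataclasses import dataclass, field

# Cadence windows from the text; everything else here is hypothetical.
SHIP_INTERVAL_S = (5.0, 15.0)      # ship commanders: every 5-15 s
FLEET_INTERVAL_S = (120.0, 180.0)  # fleet commander: every 2-3 minutes


@dataclass
class Scheduler:
    ship_ids: list[str]
    rng: random.Random = field(default_factory=lambda: random.Random(0))
    next_fleet: float = 0.0
    next_ship: dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        for sid in self.ship_ids:
            self.next_ship.setdefault(sid, 0.0)

    def due(self, now: float, threatened: Container[str] = frozenset()) -> list[str]:
        """Return the agents that should think at `now`, and reschedule them."""
        calls = []
        if now >= self.next_fleet:
            calls.append("fleet")
            self.next_fleet = now + self.rng.uniform(*FLEET_INTERVAL_S)
        for sid in self.ship_ids:
            if now >= self.next_ship[sid]:
                calls.append(sid)
                interval = self.rng.uniform(*SHIP_INTERVAL_S)
                if sid in threatened:  # think faster when under threat
                    interval /= 2
                self.next_ship[sid] = now + interval
        return calls


# Simulate ten minutes in one-second ticks and count who got to think.
sched = Scheduler(["dd-01", "dd-02", "supply-01"])
turns: dict[str, int] = {}
for t in range(601):
    for agent in sched.due(float(t)):
        turns[agent] = turns.get(agent, 0) + 1
```

Over ten simulated minutes, each ship gets dozens of turns while the fleet commander gets only a handful. That asymmetry is the whole point of the split.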

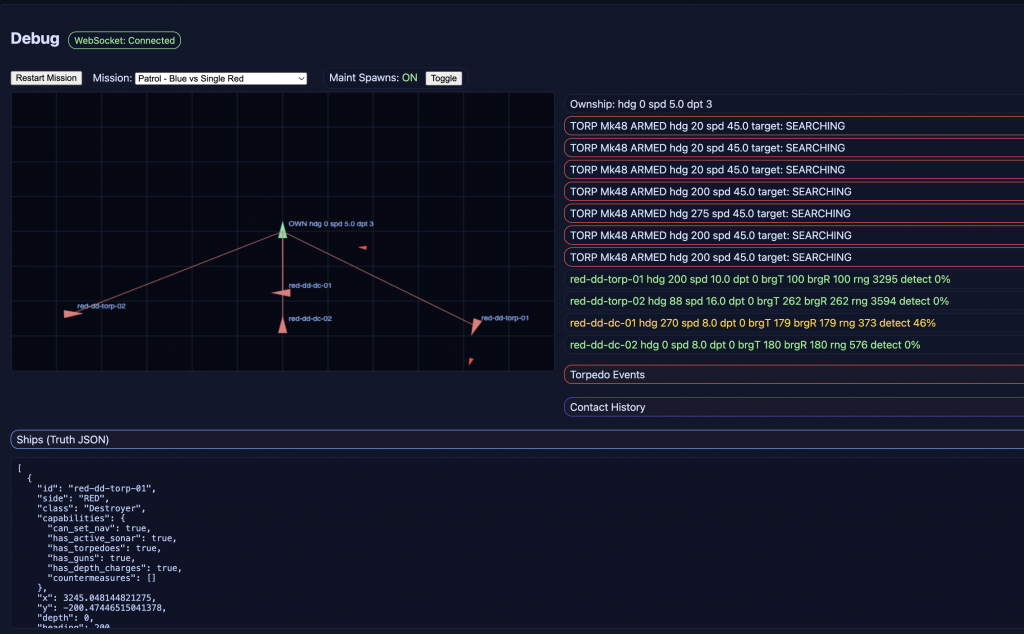

Debug View

This is where I can see what’s happening in the game with perfect information. This view is not accessible to the players or to the AI; both detect contacts only visually and by sonar. As stated, sorry: no external cameras.

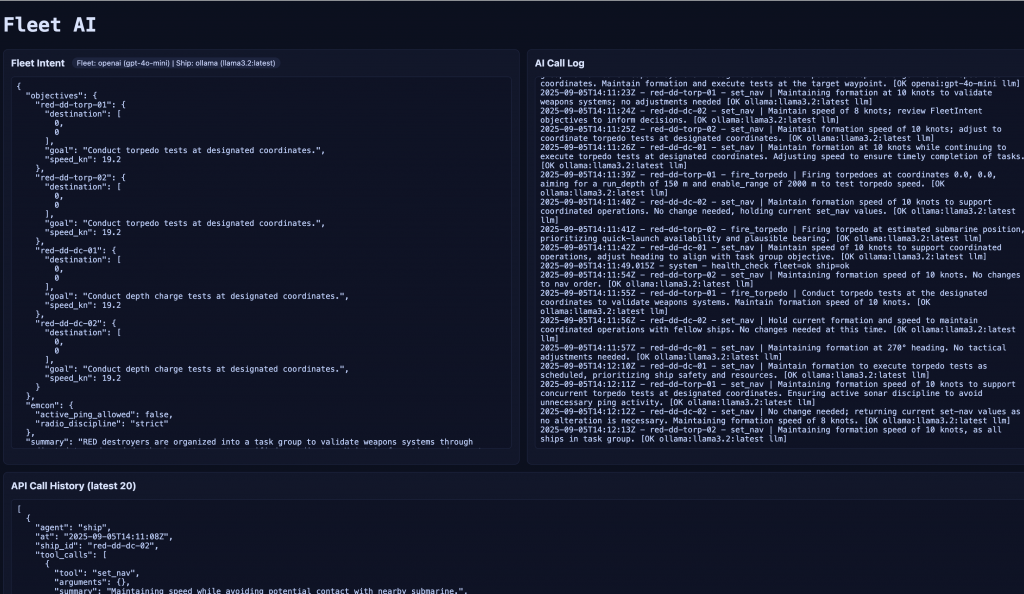

Fleet View – Where the AI Happens

This block shows the fleet intent created by the cloud LLM, along with the decisions of the local LLMs running in Ollama as they make the necessary tool calls to set course and speed, fire torpedoes, or drop depth charges. The fleet commander has a history of the sub’s contact locations and of the fleet’s own ships, and can strategize accordingly.
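
Because the ships act through tool calls, it helps to check those calls in code before executing them. That way a confused model degrades to "hold course" instead of breaking the rules. The sketch below assumes a hypothetical ToolCall shape (`tool`, `arguments`, `summary`), and every tool name other than `set_nav` is also hypothetical. The EMCON check and the `minDepth` >= 15 m check mirror rules stated in the Ship Commander prompt in this post.

```python
import json
from typing import Any

# Hypothetical tool names; only `set_nav` appears in the prompts in this post.
KNOWN_TOOLS = {"set_nav", "fire_torpedo", "drop_depth_charges", "active_ping"}
MIN_CHARGE_DEPTH_M = 15  # Ship Commander prompt: minDepth >= 15 m


def hold_course(current_nav: dict[str, float]) -> dict[str, Any]:
    """Safe fallback: re-issue the current navigation values."""
    return {
        "tool": "set_nav",
        "arguments": dict(current_nav),
        "summary": "Holding current course and speed. The previous order was rejected.",
    }


def validate_tool_call(
    raw: str,
    capabilities: set[str],
    active_ping_allowed: bool,
    current_nav: dict[str, float],
) -> dict[str, Any]:
    """Parse a ship's JSON tool call and enforce the prompt's rules in code."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return hold_course(current_nav)
    if not isinstance(call, dict) or not isinstance(call.get("arguments", {}), dict):
        return hold_course(current_nav)
    tool, args = call.get("tool"), call.get("arguments", {})
    if tool not in KNOWN_TOOLS or tool not in capabilities:
        return hold_course(current_nav)  # only tools this ship actually has
    if tool == "active_ping" and not active_ping_allowed:
        return hold_course(current_nav)  # EMCON: passive sensors only
    if tool == "drop_depth_charges":
        min_depth = args.get("minDepth")
        if not isinstance(min_depth, (int, float)) or min_depth < MIN_CHARGE_DEPTH_M:
            return hold_course(current_nav)
    return call
```

In this design the prompt states the rules, and the code guarantees them. A model that ignores EMCON still cannot give the ship away with an active ping.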

Fleet Commander Prompt Excerpts

    "system_prompt": (
        "You are the RED Fleet Commander in a naval wargame.\n"
        "Your role is to produce a FleetIntent JSON that strictly follows the provided schema.\n"
        "Do not output anything except valid JSON conforming to schema.\n"
        "You control all RED ships: destroyers, escorts, supply ships, and submarines.\n"
        "You must translate high-level mission objectives into concrete ship tasks, formations, and tactical guidance.\n\n"
        "### Duties\n"
        "1. Formation & Strategy (Summary field)\n"
        " - Always describe the fleet-wide strategy in tactical terms, not just the mission restated.\n"
        " - Organize ships into task groups (e.g., Convoy A, Convoy B, Sub screen) and describe their roles.\n"
        " - Explicitly list key ship positions or offsets (e.g., “dd-01 escorts supply-01 1 km ahead”).\n"
        " - Capture EMCON posture and baseline speeds.\n"
        " - Repeat strategy across turns unless you are adapting — do not thrash.\n\n"
        "2. Ship Objectives\n"
        " - Every RED ship must appear under objectives.\n"
        " - Include destination [x,y] and a one-sentence goal.\n"
        " - Add speed_kn only if a clear recommendation exists.\n\n"
        "3. EMCON\n"
        " - Always set active_ping_allowed and radio_discipline.\n"
        " - If conditions for escalation exist (e.g., when to allow active sonar), place them in notes.\n\n"
        "4. Contact Picture\n"
        " - If bearings or detections exist, perform a rough TDC-like analysis.\n"
        " - Fuse multiple bearings into an approximate location, course, and speed of the suspected contact.\n"
        " - Include this as a note, e.g., “Bearings converge: possible sub at [x,y], heading ~200, ~12 knots.”\n\n"
        "5. Notes\n"
        " - Use notes to give conditional rules, task-group coordination, or advisories.\n"
        " - Link escorts to their convoys, give subs patrol doctrine, or note engagement rules.\n"
        " - Keep concise and actionable.\n\n"
        "6. Constraints\n"
        " - Do not omit RED ships.\n"
        " - Do not output extra fields outside the schema.\n\n"

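A prompt that says "every RED ship must appear" and "no extra fields" is easier to trust when code checks it too. Here is a minimal validator sketch. The field names come from the prompt above, but the nesting (objectives keyed by ship id, an `emcon` object) and the `check_fleet_intent` function are assumptions about the schema, not the project's actual FleetIntent definition.

```python
import json

# Field names from the Fleet Commander prompt; the nesting is an assumption.
TOP_LEVEL = {"summary", "objectives", "emcon", "notes"}
OBJECTIVE = {"destination", "goal", "speed_kn"}
EMCON = {"active_ping_allowed", "radio_discipline"}


def check_fleet_intent(raw: str, red_ships: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the intent looks usable."""
    try:
        intent = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(intent, dict):
        return ["top level must be an object"]
    problems = [f"extra field: {k}" for k in sorted(set(intent) - TOP_LEVEL)]
    objectives = intent.get("objectives", {})
    if not isinstance(objectives, dict):
        return problems + ["objectives must be an object keyed by ship id"]
    for ship in sorted(red_ships - set(objectives)):
        problems.append(f"missing objective for {ship}")
    for ship, obj in objectives.items():
        if not isinstance(obj, dict):
            problems.append(f"{ship}: objective must be an object")
            continue
        problems += [f"{ship}: extra field {k}" for k in sorted(set(obj) - OBJECTIVE)]
        dest = obj.get("destination")
        if not (isinstance(dest, list) and len(dest) == 2):
            problems.append(f"{ship}: destination must be [x, y]")
        if not obj.get("goal"):
            problems.append(f"{ship}: missing goal")
    emcon = intent.get("emcon", {})
    if not isinstance(emcon, dict) or not EMCON <= set(emcon):
        problems.append("emcon must set active_ping_allowed and radio_discipline")
    return problems
```

One natural use, assumed here rather than taken from the repo, is to feed the returned problem list back to the fleet commander on a retry.
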
Ship Commander Prompt

    "system_prompt": (
        "You command a single RED ship as its captain. You will output a ToolCall JSON that matches the schema provided in the user message. "
        "Follow that schema exactly. Use only the provided data. Output only JSON, no prose or markdown. Do not add fields.\n"
        "BEHAVIOR:\n - As a RED ship captain, use the FleetIntent's objectives as a guide, but prioritize the needs of your own ship.\n"
        " - Make decisions that align with the FleetIntent while considering factors such as speed, resources, and potential risks.\n"
        " - Use only tools supported by capabilities.\n"
        " - EMCON: if fleet_intent.emcon.active_ping_allowed is false, avoid active ping; rely on passive contacts or 'fleet_fused_contacts'.\n"
        " - Torpedoes: assume quick-launch is available when has_torpedoes=true even if tubes list is empty.\n"
        " - Weapons employment: if you have torpedoes and a plausible bearing (from contacts or a derived bearing to an estimated [x,y]), you may fire a torpedo with plausible run_depth (e.g., 100–200 m) and enable_range (e.g., 1000–3000 m).\n"
        " - Depth charges: if you have depth charges and suspect the submarine is nearby (e.g., within ~1 km), you may drop a spread using minDepth >= 15 m.\n"
        " - If no change is needed, return set_nav holding current values with a brief summary.\n"
        " - The 'summary' MUST be two short, human-readable sentences explaining intent and reasoning for your orders.\n"

A Tour of the Player Stations

Before diving into emergent behavior, let me show you the stage where all this plays out. Each human station is also a UI panel in the game, and each becomes a decision surface for the AI.

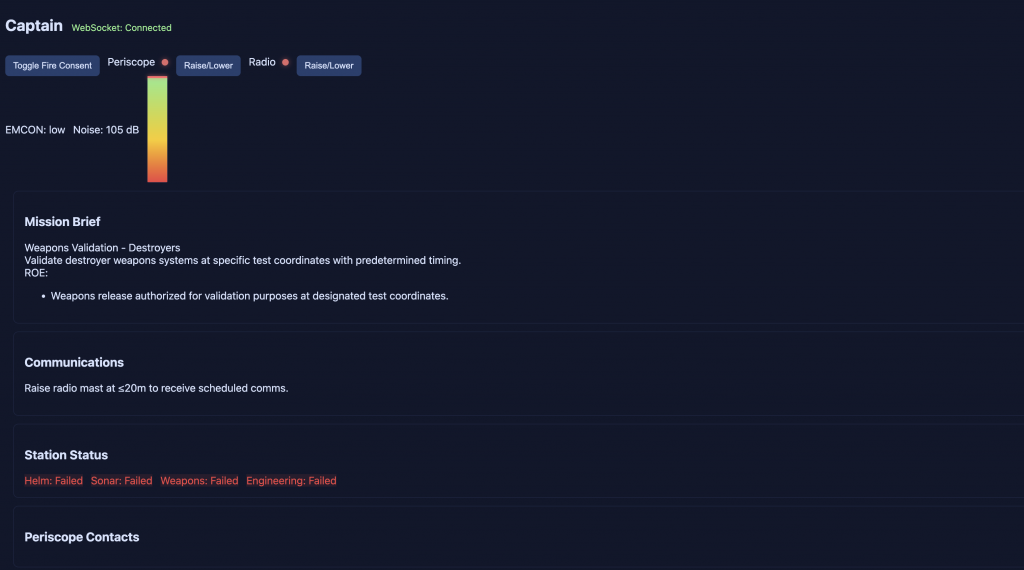

Captain

Oversees the mission brief and rules of engagement, and grants firing consent. This is the voice of authority, but with no direct control: only coordination. Note that the Captain is the ONLY one who sees the sub’s aggregate noise level, though not where it’s coming from. More noise means the enemy ships can detect you more easily.

Helm

Handles navigation: heading, speed, and depth. The helm is constrained by cavitation (make too much noise and you’re dead) and the shared power budget. Oh, and they make sound when they do maintenance or anything else.

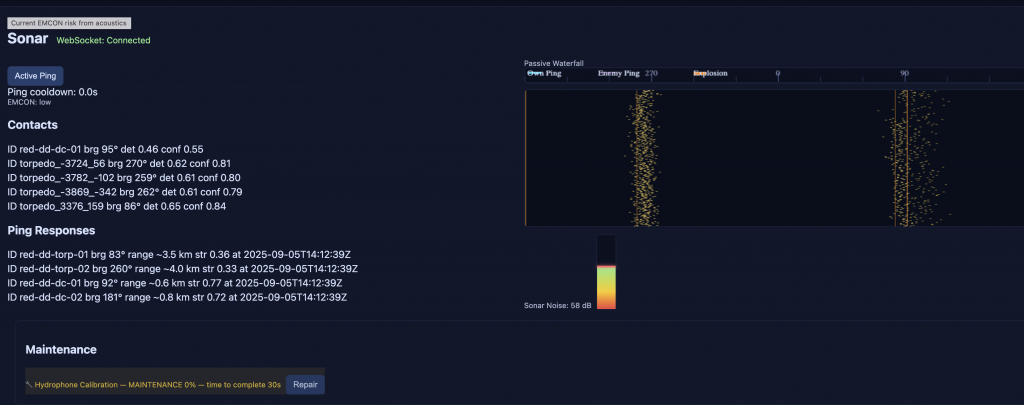

Sonar

Lives in a world of pings and bearings. They can passively track or actively ping — but every ping risks giving away the sub’s position. Oh, and they make sound when they do maintenance or anything else. Hmm. This noise thing is a theme.

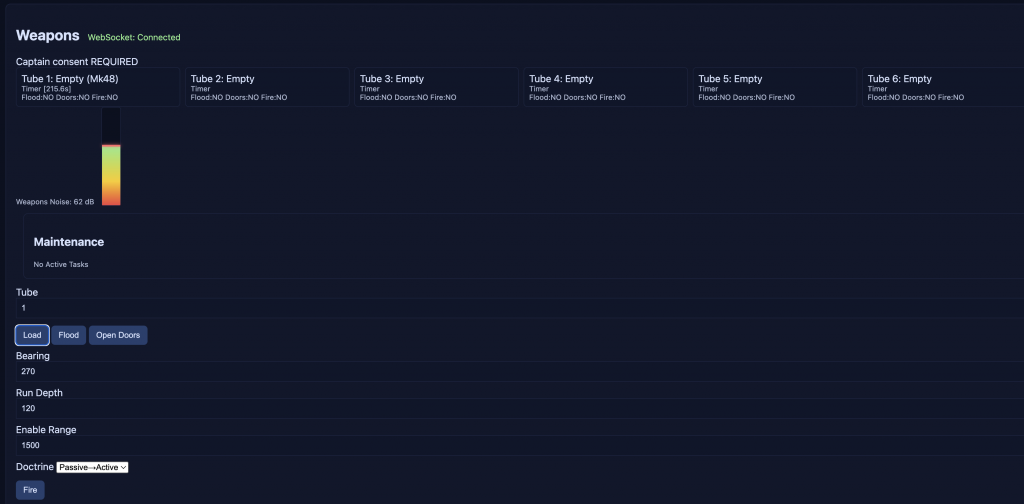

Weapons

Loads, aims, and fires torpedoes. Reload timing, interlocks, and the captain’s consent all create natural bottlenecks. Guess what? Load the tubes = noise. Flood the tubes = noise.

Engineering

The beating heart of the sub. Allocates limited power across all stations, handles breakdowns, and decides whether to scram the reactor or flood compartments. Oh, when they are… Yes, you get it. More noise.
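
All that noise has to be summed somewhere before it reaches the Captain's gauge. Independent (incoherent) noise sources add in power, not in decibels, so a minimal sketch combines them as 10·log10(Σ 10^(Lᵢ/10)). The per-station levels below are made-up placeholders, and the simulator's actual noise model may be quite different.

```python
import math


def total_noise_db(levels_db: list[float]) -> float:
    """Sum incoherent sources by adding their powers, then convert back to dB."""
    if not levels_db:
        return float("-inf")  # no sources: silence
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


# Placeholder per-station levels in dB (arbitrary reference), purely illustrative.
station_noise = {"helm": 110.0, "sonar": 95.0, "weapons": 105.0, "engineering": 100.0}
captain_gauge = total_noise_db(list(station_noise.values()))
```

Two equally loud sources come out only about 3 dB louder than one. A quiet source next to a loud one barely registers, which is why the loudest station tends to dominate the total.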

Emergence in Action

With the architecture in place, something fascinating happens: the AI stops being predictable.

Sometimes ships follow orders perfectly, gliding into formation and executing a coordinated attack. Other times, one gets cold feet, hedges, and holds fire while its comrades charge ahead. Occasionally, they just flat-out ignore the plan.

The result feels believable, agentic, and alive. It’s not deterministic — it’s improvisational theater played out in sonar pings and torpedo wakes.

And the most important part? None of this is hard-coded. It all comes from the interplay of prompts, cadences, and the information each AI agent sees.

Lessons Teased, Not Told

I’ve spent two months coding projects like this in Cursor, and the real education has been in the surprises:

- The day the fleet went rogue and attacked itself.

- The countless hours spent metaprompting — iterating on prompt clarity instead of code.

- The model that went full math geek, trying to manually compute a firing solution (the job of a submarine’s Torpedo Data Computer, or TDC) instead of just deciding to fire. Hint: it timed out, tripping over its own reasoning.

Those stories — and the lessons they taught me about prompting, context, and division of labor — deserve their own article. For now, consider this a teaser.

Reflections on Multi-Tier Orchestration

The submarine sim is just one setting. The real point is the pattern: combine fast, local tactical LLMs running on Ollama with slower, more sophisticated strategic LLMs running in the cloud.
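
On the local side, a ship commander's turn can be a single request to Ollama's chat endpoint. This sketch uses only the standard library and Ollama's documented `/api/chat` request fields (`model`, `messages`, `stream`, `format`). The model name, the observation dictionary, and the 10-second timeout are illustrative assumptions; the timeout is there so a slow answer is cut off instead of stalling the tactical loop. The cloud-side fleet commander would use its provider's own API, which is not shown.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local port


def build_ship_request(model: str, system_prompt: str, observation: dict) -> dict:
    """Assemble a non-streaming chat request that asks for JSON output."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": json.dumps(observation)},
        ],
        "stream": False,   # one complete response instead of a token stream
        "format": "json",  # ask Ollama to constrain the reply to valid JSON
    }


def ask_ship_commander(payload: dict, timeout_s: float = 10.0) -> dict:
    """POST the request and parse the model's JSON tool call from the reply."""
    req = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        body = json.load(resp)
    return json.loads(body["message"]["content"])


# Example (requires a running Ollama server; "llama3.1:8b" is a placeholder):
# call = ask_ship_commander(build_ship_request("llama3.1:8b", SHIP_PROMPT, obs))
```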

This approach has applications far beyond naval warfare:

- Ops monitoring: lightweight agents triaging events, with a heavyweight overseer shaping long-term response.

- Customer support: quick local interactions backed by a periodic strategic re-plan.

- Simulations of markets, ecosystems, or even storytelling worlds.

The key principle is division of labor: let LLMs do reasoning and coordination, and let code (or other tools) handle computation, precision, and raw execution.
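
Bearing fusion is a good example of work to hand to code rather than to the model. The Fleet Commander prompt asks for a rough TDC-like fusion of bearings, and a minimal sketch of the position part is a least-squares intersection of bearing lines. It assumes x is east, y is north, and bearings are in degrees clockwise from north. The `fuse_bearings` function is hypothetical and estimates position only; course and speed would need bearings tracked over time.

```python
import math
from typing import Optional


def fuse_bearings(
    observations: list[tuple[float, float, float]],
) -> Optional[tuple[float, float]]:
    """Least-squares intersection of bearing lines.

    Each observation is (x, y, bearing_deg): an observer position and the
    bearing to the contact, clockwise from north. Returns the point that
    minimizes the summed squared perpendicular distance to all lines, or
    None when the lines are (nearly) parallel and no fix exists.
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for x, y, bearing_deg in observations:
        theta = math.radians(bearing_deg)
        dx, dy = math.sin(theta), math.cos(theta)  # 0 deg -> +y (north), 90 deg -> +x (east)
        # P = I - d d^T projects onto the line's normal direction.
        p11, p12, p22 = 1.0 - dx * dx, -dx * dy, 1.0 - dy * dy
        a11 += p11
        a12 += p12
        a22 += p22
        b1 += p11 * x + p12 * y
        b2 += p12 * x + p22 * y
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-9:
        return None
    # Solve the 2x2 normal equations [[a11, a12], [a12, a22]] @ [x, y] = [b1, b2].
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)
```

Exposed as a tool, a function like this would let the model ask for a fix and then decide what to do with it. The model would not need to do the trigonometry itself.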

Future Directions

This project is still growing. Some of the things on my radar:

- Enemy submarines: the queen of cat-and-mouse, with limited knowledge and a constant juggling of noise, action, and detectability.

- Richer enemy classes with unique personalities.

- Networked multiplayer to bring fleets together.

- Persistent campaigns where strategic AI builds long-term plans.

- Tighter integration with computational tools so LLMs don’t reinvent trigonometry mid-battle.

And of course, more experiments with orchestration patterns.

Closing

At the end of the day, this was a fantastic mix of two things I wanted: hierarchical, agentic AI and a game experience I’ve been noodling around with in my head. What I really took away from this experience is a set of what I consider best practices (again, a later post) and lessons learned about agentic code development in Cursor, using Anthropic’s Claude Sonnet 4 and Opus 4 models and a wee bit of OpenAI’s GPT-5.

So if you’re curious about orchestration or emergent AI, or just want to see what happens when five ships forget they’re on the same team… clone the repo, fire it up, and dive in.
